NLP Papers at ICML2022

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Co-training improves prompt-based learning for Large Language Models

Lang, Agrawal, Kim, Sontag. MIT

This paper proposes to co-train a LLM (GPT3) with a smaller Bert model.

Co-training (Blum & Mitchell 1998)1 has always seemed like a funny kind of iterative unsupervised method mostly based on intuition. It’s usually more compelling if you have two views of the same data which are complementary (e.g., tweet title and tweet image), and so one view benefits from the pseudo-labels gathered from the other view. Since we know how to simply combine two views of the same data in big neural land (multimodal neural networks), co-training isn’t very popular anymore.

Here instead of having two views of the same data, the authors use two models $\phi_0$ and $\phi_1$ applied to the same input. This starts to look more like a teacher student or model calibration setup rather than co-training “on different views”. They do justify this framing with recent work (see paper for references) which says that the views can be identical as long as $\phi_0(x)$ and $\phi_1(x)$ are different enough.

Background

The co-training framework. The high level idea is that you have two views of your data, A and B, you gather labels from A and use it to train a classifier on B. Use the new labels on B and try to train a classifier on A and iterate.

(Its easy to understand but if you’re new to it best with a video than me trying to explain it here.)

Method

GPT’s view The first view is $\phi_0^{(i)}(x) \in \mathbb{R}^{|V|}$ a vector of output probabilities on the vocabulary space using a very powerful model like GPT3. To restrict the vocabulary space, they take the top 25% of tokens with nonzero probability mass across all prompt examples. For $k$ prompts, the first view is a matrix $\phi_0(x) \in \mathbb{R}^{k \times |V|}$. Note that $k$ is not $k$ datapoints, but $k$ prompts on the same datapoint. They combine the $k$ prompts on the same datapoint into a single pseudolabel by using a calibration Matrix (Zhao et al., 2021)2, and train this calibration matrix $W$.

Smaller model view The second view is $\phi_1(x)$ is the second last layer where $\phi_1$ is a small pretrained model (they use Deberta).

Selecting Confident data.

This is a standard development in co-training to avoid the negative loops (wrong pseudo labels feeding wrong pseudo labels). They use model confidence based on the assumption that every class accounts for at least 1% of the data and a “cut-statistic”. The idea is to form a graph of datapoints connecting vertices who are nearest neighbours in $\phi(x)$, and consider an edge between the nearest neighbors cut if it has a different label from its nearest neighbors. If a node has a different label from its neighbors, we are less confident about this node. This seems to be a direct application of Zhang & Zhou 20113.

References

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Black Box Tuning for Language-Model-as-a-Service

Sun, Shao, Qian, Huang, Qiu. Fudan University

This paper works in the setting where big LM service providers (e.g. GPT3) work as black-box API services, and need to give users feedback on how to engineer their prompts without gradients. This is a compelling scenario, most people are already used to the idea that the big boys host the big models through APIs, something which will only become more prevalent.

Enter Derivative Free Optimization (DFO). DFO is not new, but DFO at scale is a problem because the existing algorithms “are known to suffer from slow convergence rate when the dimensionality of the search space is high. Thus, it is intractable to optimize even only the continuous prompts, which can be tens of thousands of parameters, using DFO algorithms.”

Despite hyping us up in the introduction, a fancy new DFO algorithm is not invented here and low dimensionality to the rescue!

The authors project the original prompt space using a random linear projection onto a much smaller subspace. This paper feels like a straightforward application and implementation of two well-known existing ideas (random projection to reduce dimensions + DFO). Although it is still certainly valuable as even though they are well-known in ML land, these ideas may not be that well-known in NLP land. Kudos to them as well for stepping out of gradient land.

Someone interested in applying or following up on this work should stare carefully at their many experiments and ablations as the method almost feels too simple to outperform gradient based methods (which they claim somewhere somewhere in the introduction).

Method

Step1: Write the loss function

Assume we have access to the logits $f(X, Y)$ so minimally we can compute some loss $\mathcal{L}(X, Y)$. We want to optimise some $p \in \mathbb{R}^D$ (prompt) which is combined with $X$. But it is intractable to do DFO on $p*=\mathrm{argmin}_{p \in \mathcal{P}} \mathcal{L}(f(p; X), Y)$, and so instead we want to optimise a lower dimension $z \in \mathbb{R}^d$. We can do this with a standard technique of using a random projection matrix $A \in \mathbb{R}^{D\times d}$ to project $z$ back to $D$ space.

\begin{equation} z^* = \mathrm{argmin}_{z \in \mathcal{Z}} \mathcal{L}(f(Az + p_0; X), Y) \end{equation}

Step2: Apply a standard DFO algorithm

The authors directly apply Covariance Matrix Adaptation Evolution Strategy (CMA-ES4; Hansen, 2016). The main idea behind this algorithm is that a population of new query solutions (offspring) is sampled at every step from the multivariate normal, where the mean and std of the multivariate normal are parameters updated based on likelihood of previous successes.

Tricks to make it work

- Sampling rows from He initialisation for the matrix $A$ appear to be better than sampling from normal distribution. No reason given why.

- Restricting the search space of $\mathcal{Z}$ to $[-5, 5]^d$.

- Using x-ent for the loss function instead of accuracy. This is ML 101.

- Instead of optimizing the entire prompt, they start with some initial prompt ($p_0$) and only do some small perturbation. $Z + p_0$

References

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Latent Diffusion Energy-based Model for Interpretable Text Modeling

Yu, Xie, Ma, Jia, Pang, Gao, Zhu, Zhu, Wu. UCLA

This paper combines a particular “interpretable” Energy based model, namely the symbol-vector coupling energy based model, with inference techniques from diffusion models.

It has a bunch of pre-requisites and can be intimidating to someone (me) unfamiliar with the literature. To understand what the author’s actual contribution is here you need to be quite familiar with the problem of learning EBMs, previous work and dedicate some time starting at before and after equations.

Background

First some high level background on EBMs. EBMs are a class of probabilistic generative models (like normalizing flows, variational auto-encoders, autoregressive models, and GANs). You can pretty much view anything neural under the lens of EBMs because it just means unormalised score model. EBMs explicitly parameterize the distribution’s log-probability function while ignoring its normalizing constant.

This gives flexibility when designing the energy function but comes at the (very high) cost of making likelihood computation and sampling generally intractable. Since we cannot compute likelihoods, we cannot do MLE training, so papers revolve around some way to approximate the ML gradient. For e.g., in the “old days” when Restricted Boltzman machines were introduced, people used to do MCMC to approximate the likelihood gradient which is typically slow.

More specific background

Let’s start with the model. They adopt the Learning latent space energy-based prior model. (Pang et al., 2020a)5 which is a symbol-vector coupling model where the continuous latent variables are coupled with discrete one-hot symbol variables. We have a symbolic one-hot vector $y \in \mathbb{R}^K$, and $z$ the latent continuous vector. $y$ and $z$ are coupled by an EBM $p_{\alpha}(y, z)=\frac{1}{Z_{\alpha}} exp(\langle y, f_{\alpha}(z) \rangle )p_0(z)$. Thus the generative model where $\theta=(\alpha, \beta)$ is

\begin{equation} p_{\theta}(y, z, x) = p_{\alpha}(y, z)p_{\beta}(x|z) \end{equation}

The whole point of this is to allow discrete structure, but also not sacrificing the generation quality. So we can use one representation for interpretability and another for performance. The ELBO that we want to minimise after marginalising out $y$ is

\[\begin{equation} \mathrm{ELBO}_{\phi, \theta} = \mathbb{E}_{q_{\phi}(z|x)} [ \log p_{\beta} (x|z) - \log_{q_{\phi}}(z|x) + \log p_{\alpha}(z)] \end{equation}\]Next, the learning algorithm. Gao et al., 20206 showed how to use diffusion-like methods to learn a sequence of EBMs. Each EBM is trained by maximising the conditional probability of the data given their noisy versions and intuitively, this is easier to learn because the distribution conditioned on the noise, is easier than learning the marginal likelihood objective. (Mathematically shown that $p(z_t | z_{t+1})$ is approximately a single-mode Gaussian distribution when the noise is sufficiently small.)

This Work

Instead of a vanilla VAE type framework, they instead consider the diffusion models. Recall diffusion models learn a sequence of EBMs by optimising conditional likelihoods which are more tractable than a marginal likelihood. So now instead of the joint distribution $p(y, z, x)$, we have the joint distribution of the trajectory of latent variables $p(y, z_{0:T}, x)$. Rewriting the ELBO, they end up with

\[\begin{align} \mathrm{ELBO}_{\phi, \theta} &= \mathbb{E}_{q_{\phi} (z_0|x)} [ \log p_{\beta} (x|z_0) - \log_{q_{\phi}}(z_0|x)]\\ &+ \mathbb{E}_{q_{\phi}(z_0|x)} [ \log \int_{z_{1:T}} p_{\alpha} (z_{0:T}) dz_{1:T}] \end{align}\]There is a lot more in the paper in terms of derivations and detailed algorithms. I feel like I oversimplified this work but I think that’s the main gist or the main premise of the paper, and the rest of it is scaffolding to make optimising this new ELBO work out.

References

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

1.Controlling Conditional Language Models without Catatstrophic Forgetting

Korbak, Elsahar, Kruzewski, Dymetman. Naver Labs

This paper does not introduce a new problem or a totally new method, and (very) closely follows work by Khalifa et al., 20217 “A distributional approach to controlled text generaiton”. This formulates the problem of controlled text generation as a constraint satisfaction problem over the distribution $p$, where $p$ is the desired target LM. Understanding the previous paper is a strong pre-requisite, as this work’s contribution is in extending the unconditional generation method to conditional generation. An alternative title for the sake of our sanity would be “A distributional approach to controlled conditional text generation.”

Motivation

The problem is trying to control generation from pretrained language models without having a signal for fully supervised finetuning the entire generated sequence. The assumption is we only have an indicator $b(x) \in \{0, 1\}$ of whether the sample $x$ satisfies the control objective. E.g. compilable source code, or factually correct summaries. The other problem is catastrophic forgetting when any model weights are retrained.

Background

Khalifa 2021 “formalizes the problem of controlled text generation as a constraint satisfaction problem over the probability distribution $p$ representing the desired target LM.”

What do we want from $p$? First the constraints that we want are specified using $\mu_i$, where $\mu_i = \mathbb{E}_{x \sim p} \phi_i(x)$ of predefined feature functions $\phi_i(x)$ for $i \in \{1, \cdots, k\}$. Let $\mathcal{C}$ be the set of all distributions that satisfy the moment constraints. Then, $p$ is a distribution from this set but also minimizing the KL divergence from $a$ the original LM (to avoid catastrophic forgetting).

\begin{equation} p = \mathrm{argmin}_{c\in \mathcal{C}} KL(c, a) \end{equation}

How do we train the EBM P? In the special case of only pointwise constraints, where $\phi_{x} \in \{0, 1\}$, they prove $p(x) \propto a(x)b(x)$. This means the EBM is $P(X) = a(x)b(x)$. When the constraints are distributional, i.e., $\phi_x \in [0, 1]$, then $P(x)=a(x)e^{\lambda \dot \phi(x)}$, where the $\lambda$ can be solved using self-normalized Importance Sampling.

How do we sample from EBM? Since the EBM is an unnormalized distribution, we cannot directly sample from it. As a result, Khalifa train a policy $\pi_{\theta}$ to approximate $p$ from $P$ using the Distributional Policy Gradient algorithm (Parshakova et al., 2019)8. The algorithm works by minimising the x-ent($\pi_{\theta}, p)$ using importance sampling. One step of gradient descent looks like $\theta \leftarrow \theta + \frac{P(X)}{q(x)} \nabla_{\theta} \log \pi_{\theta}(x)$.

In this paper

We want a model that’s good at summarization, translation. These are all conditional generation tasks. Let’s say we deal only with the simple case of pointwise constraints where $P(x)=a(x)b(x)$. If we wanted to make the above conditional on say the source sentence $c$, we would have an EBM $P_c(x) = a(x|c)b(x,c)$.

How do we sample from this EBM? Recall we need to train a policy. But how do we train a single policy for the task, given all the different “contexts” in the training data? e.,g paragraphs before summarisation, or all the source sentences in translation. This work says that you should minimize the expected X-ent between $\pi_\theta$ and the multiple $p_c$, where the expectation is over $\tau(c)$, a distribution over $c \in \mathcal{C}$. The paper gives the final form of this gradient step as something complicated looking but if one follows the algorithm pseudocode it should be doable. There is however, an estimation of the normalization constant which might be expensive in a single gradient step, details in the paper.

References

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Generative Cooperative Networks for Natural Language Generation

Lamprier, Scialom, Chaffin, Claveau, Kijak, Staiano, Piwowarski. ISIR France

GANS are notoriously difficult to train for NLP applications because the reward signal is discrete (discrete word output). Because of the discrete signal, people use RL methods to optimize Language GANs which suffer from high variance and non-stationary reward distributions (Caccia et al., 2020)9. This paper hopes to change that by using a particular formulation of the generation step in the framework of Generative Cooperative Networks (e.g., SelfGANs10), which they claim avoids hacky learning schedules and has theoretical convergence guarantees.

If this actually works it’s really quite amazing because their target distribution takes on the super simple form of $q_t \propto p_{t-1}D_t$. I learnt alot from this paper because they didn’t seem to be taking any short cuts or hacks and even went above and beyond with MCTS. The paper also provides a nice discussion in relation to existing work.

Background

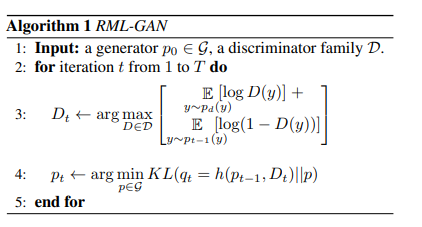

Generative Cooperative Networks are a class of GANs which uses a cooperative decoding scheme in which the discriminator network for e.g., scores beam search outputs to favor more realistic sequences. A unifying pseudocode for this class of networks is given by the authors:

Line 3 is a standard GAN Objective for training the discriminator.

Line 4 optimizes the generator distribution $p_t$ by minimising the KL divergence KL$(q_t||p_t)$, according to a fixed behavior distribution $q_t$ which is cooperative as it incorporates the discriminator $D_t.$

Reward Augmented Maximum Likelihood Training (Norouzi et al., 2016)11 proposes that we should have a generator distribution $q(x) \propto exp(\frac{f(x)}{\tau})$, where $f(x)$ consists of some reward function.

Method

Formulation of Cooperative Network. In this work, they propose to consider $f(x)$ as a cooperative (Discriminator+Generator) Network. $f(x) = \log (p_{t-1}D_t(x))$, with $p_{t-1}$ as the previous generator distribution and $D_t$ as the discriminator at step $t$ (trained on samples from $p_{t-1}$.

Appendix A, Theorem 2.1 and 2.2 give theoretical proofs on convergence which I didn’t try to follow.

Efficient Sampling Proving $q$ converges is not the end of the story, and we still need a way to sample from $q$. The authors consider nucleus sampling and mixture of sampling distributions, and also propose Monte Carlo Tree Search (MCTS). The main idea behind MCTS is that nodes in the search space gets selected and expanded, evaluated (with rollouts) and backprop to the selection phase based on the result of the rollout. The main trick here is using the discriminator to evaluate the node instead of employing a full rollout of the sequence.

References

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

What Language Model Architecture and Pretraining Objective Works Best for Zero-Shot Generalization?

Wang, Roberts, Hesslow, Le Scao, Chung, Beltagy, Launay, Raffel. HuggingFace.

A massive compute intensive paper ( with “a total of 830,000 TPUv4-hours over the study”) from huggingface.

For model architectures they consider, Decoder Only, Encoder-Decoder, and Non-causal Decoder. For pretraining objective they consider, Full LM objective and masked LM objective. Perhaps intersting is this Non-causal Decoder work which has never really caught my eye before.

In conclusion, an autoregressive decoder works better if there is no fine-tuning, and the encoder-decoder architecture works better if we are allowed to fine-tune.

Applaud this effort and hope people read the conclusion section of the paper so the carbon counts for something.

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Towards Coherent and Consistent Use of Entities in Narrative Generation

Papalampidi, Cao, Kocisky. Deepmind

This is primarily an architecture paper which uses dynamic memory for the problem of coherent entities in text generation. The authors propose a dynamic entity memory and cross-attention blocks at each layer of the model to augment a pre-trained LM. They say that the key difference from previous work is in being able to condition on multiple relevant entities instead of just one, and update all entity slots in the dynamic memory based on soft-attention. They also define metrics for “automatically measuring entity coherence and consistency”.

I’m usually not a big fan of such work (including the line of retrieval augmented models) because I’m in the camp which believes very large LMs with lots of parameters are already implicitly working with internal memory banks and all this should come for free. Personally I believe that while more architecture engineering should give more data sample efficiency, if the architecture also comes at the cost of requiring additional supervision (instead of purely self-supervised) ultimately the adoption is temporary/patchy until the next scalable model architecture comes out.

Also not sure how interesting it is as it combines several well-known mechanisms of soft-attention, dynamic memory, gating etc but I don’t expect too much novelty - the techniques in this field are kind of getting saturated. Still a solid engineering effort nonetheless.

Background

Memory augmented LMs are a type of architecture where in addition to the base architecture of whatever LM you’re working with, you also have these additional vectors which get stored and updated as you see more input. They are usually gated for more “learning control”, and then these vectors interact with your default hidden states in some way to influence prediction.

Method

They have j memory slots, each with Key-value. The key is a fixed representation (presumably just the token) and the value is dynamic and gets updated as the model reads in the inputs.

Each layer of the transformer has a cross-attention block that takes the input representation of the token and all memory slots, and computes some representation from it using standard softmax concatenation operations. There are some gating mechanisms in various places for updating the memory value of the cell.

Finally there is a “regularisation of cross-attention scores” step where they minimise the KL Divergence between cross-attention weights and the “ground truth-distribution for that token”. (Presumably this ground-truth distribution has all probability on one token and so the soft-attentions become hard-attentions again but hey with a tuning hyperparameter on this KL loss, why not. )

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Improving Language Models by Retrieving from Trillions of Tokens

Platoon from DeepMind

This paper is a massive scaling up of retrieval augmented models. The paper is chock full of their training details, analysis of different drills (ablations) and battle procedures.

Interesting is the care taken to separate the train from the field (test) data (extra obvious problem since we are doing direct retrieval). They compute a 13-gram Jaccard similarity using MinHash and remove all training documents with high similarity (0.8) or higher to validation or test set document, in addition removing all validation and test articles from Wikitext 103.

There is a further section on 2.6 Quantifying dataset leakage exploitation which is kind of new.

First they chunk up evaluation and training data. For each evaluation chunk, retrieve the 10 closest nearest neighbors in the training data, and compute the longest token substring. They can then plot on increasing amounts of data overlap, how much the model log-likelihood of each chunk is which indicates how much it exploits evaluation leakage.

Nice example of how to write a Standard Operating Procedure, Salute.

layout: post title: NLP Papers at ICML2022 date: “2022-10-24” status: mathjax: true —

Self-conditioning Pre-Trained Language Models

Suau, Zappella, Apostoloff, Apple

This paper identifies “expert” units (neurons) in the Transformer LM and “activates” them. It’s an intuitive idea, one that surely many people have been thinking of in terms of LM controllability. It starts out quite promising and I was expecting something simple but elegant and easy to implement but got thrown off more than the more “complicated” papers.

Background

Just by reading the abstract you would expect that a lot of work had been done in this area so a literature review of related work is particularly relevant here. However they only give one particularly relevant reference, Radford et al., 2017 (don’t get too excited, it’s not Radford Neal).12 who do “L1 regularisation of a logistic regression classifier on top of representations”. I feel like I’ve seen a lot more related work to identifying neurons just under a different name.

Method

I found the notation strangely unclear here and the method missing some implementation details for an ICML paper. For e.g., they write “A sentence $\mathbf{x} = \{x_i\}”$ (uh .. probably missing some subscript and superscripts here..) and later “Let $z_m^c = {z_{m,i}^c}^{N}_{i=1}$ be the outputs of neuron $m$ to sentences ${\mathbf{x}_i^c}$”. I’m pretty sure that the subscript on $\mathbf{x}$ was used to index a word in earlier notation, so I’m pretty confused by this point.

“We treat $\mathbf{z}^{c}_m$ as prediction scores for the task $\mathbf{b}^c$.” What is this task? Is it a binary prediction of whether $c$ is present at position $i$ from 1 to $N$? How did we get the “prediction scores” from a single neuron? Now a little tired, I gave up.

References

-

Blum, Mitchell. 1998 Combining Labeled and Unlabeled Data with Co-Training ↩

-

Zhao, Wallace, Feng, Klein, Singh. 2021 Calibrate before use: Improving Few Shot Performance of Language Models ↩

-

Zhang, Zhou. 2011 Cotrade: Confident cotraining with data editing ↩

-

Hansen 2016. The CMA Evolution Strategy: A Tutorial ↩

-

Pang, Wu. Latent Space Energy-Based Model of Symbol-Vector Coupling for Text Generation and Classification ↩

-

Gao, Song, Poole, Wu, Kingma. Learning Energy-based Models by Diffusion Recovery LIkelihood ↩

-

Khalifa, Elsahar, Dymetman. A distributional approach to controlled text generation. ICLR2021 ↩

-

Parshakova, Andreoli, Dymetman. Distributional Reinforcement Learning For Energy-Based Sequential Models ↩

-

Caccia, Caccia, Fedus, Larochelle, Pineau, Charlin. Language GANS falling short. ↩

-

Scialom, Dray, Lamprier, Piworarski, Staiano. To Beam Or Not To Beam: That is a Question of Cooperation for Language GANs ↩

-

Norouzi, Bengio, Chen, Jaitly, Schster, Wu, Schuurmans. Reward Augmented Maximum Likelihood for Neural Structured Prediction ↩

-

Radford, Jozefowicz, Sutskever, 2017. Learning to generate reviews and discovering sentiment. ↩