NLP Papers at ICML2022

Generative Cooperative Networks for Natural Language Generation

Lamprier, Scialom, Chaffin, Claveau, Kijak, Staiano, Piwowarski. ISIR France

GANS are notoriously difficult to train for NLP applications because the reward signal is discrete (discrete word output). Because of the discrete signal, people use RL methods to optimize Language GANs which suffer from high variance and non-stationary reward distributions (Caccia et al., 2020)1. This paper hopes to change that by using a particular formulation of the generation step in the framework of Generative Cooperative Networks (e.g., SelfGANs2), which they claim avoids hacky learning schedules and has theoretical convergence guarantees.

If this actually works it’s really quite amazing because their target distribution takes on the super simple form of $q_t \propto p_{t-1}D_t$. I learnt alot from this paper because they didn’t seem to be taking any short cuts or hacks and even went above and beyond with MCTS. The paper also provides a nice discussion in relation to existing work.

Background

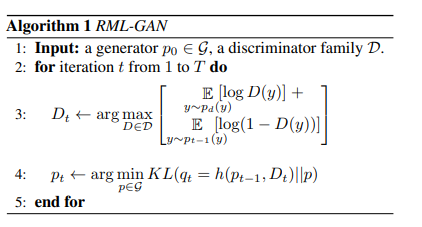

Generative Cooperative Networks are a class of GANs which uses a cooperative decoding scheme in which the discriminator network for e.g., scores beam search outputs to favor more realistic sequences. A unifying pseudocode for this class of networks is given by the authors:

Line 3 is a standard GAN Objective for training the discriminator.

Line 4 optimizes the generator distribution $p_t$ by minimising the KL divergence KL$(q_t||p_t)$, according to a fixed behavior distribution $q_t$ which is cooperative as it incorporates the discriminator $D_t.$

Reward Augmented Maximum Likelihood Training (Norouzi et al., 2016)3 proposes that we should have a generator distribution $q(x) \propto exp(\frac{f(x)}{\tau})$, where $f(x)$ consists of some reward function.

Method

Formulation of Cooperative Network. In this work, they propose to consider $f(x)$ as a cooperative (Discriminator+Generator) Network. $f(x) = \log (p_{t-1}D_t(x))$, with $p_{t-1}$ as the previous generator distribution and $D_t$ as the discriminator at step $t$ (trained on samples from $p_{t-1}$.

Appendix A, Theorem 2.1 and 2.2 give theoretical proofs on convergence which I didn’t try to follow.

Efficient Sampling Proving $q$ converges is not the end of the story, and we still need a way to sample from $q$. The authors consider nucleus sampling and mixture of sampling distributions, and also propose Monte Carlo Tree Search (MCTS). The main idea behind MCTS is that nodes in the search space gets selected and expanded, evaluated (with rollouts) and backprop to the selection phase based on the result of the rollout. The main trick here is using the discriminator to evaluate the node instead of employing a full rollout of the sequence.

References

-

Caccia, Caccia, Fedus, Larochelle, Pineau, Charlin. Language GANS falling short. ↩

-

Scialom, Dray, Lamprier, Piworarski, Staiano. To Beam Or Not To Beam: That is a Question of Cooperation for Language GANs ↩

-

Norouzi, Bengio, Chen, Jaitly, Schster, Wu, Schuurmans. Reward Augmented Maximum Likelihood for Neural Structured Prediction ↩